Simon Fell > Its just code > Web Services

Tuesday, December 3, 2013

There's a big update to zkSforce available: it now supports the entire v29 Partner API, including all the new calls, SOAP headers, etc. There are also async/blocks versions of all the API calls, so you can safely make SOAP calls on a background thread and not block the UI.

The update is mostly driven by a new tool I wrote which generates code from the WSDL, so as new WSDLs get released, keeping zkSforce up to date will be easier. (Note you don't need to use this tool; just add zkSforce to your project as usual and off you go.) This move to generated code means there are a number of minor changes from previous versions that you might need to address if you update to the latest version. Check the readme for all the details.

If you try it out, let me know how you get on.

Sunday, November 2, 2008

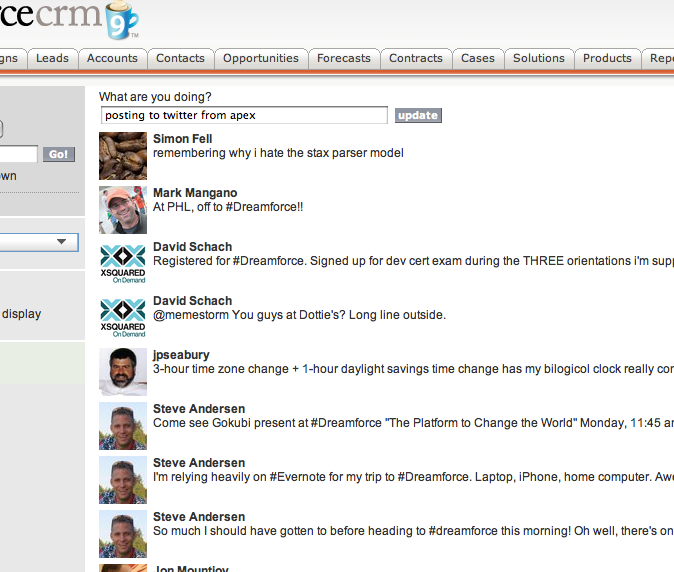

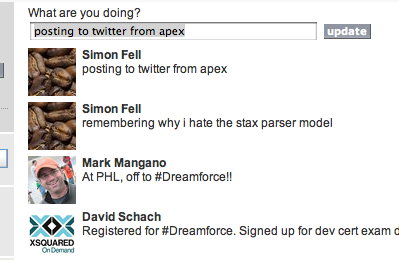

I've been trying out the Twitter API from Apex code. It turns out to be reasonably straightforward: I was able to put together a little client class that can get your friends timeline and post new updates (the rest of the API is basically a matter of typing at this point). Using this means you can post Twitter updates with just 2 lines of Apex code:

TwitterForce t = new TwitterForce('someUsername', 'somePassword');

t.postUpdate('@arrowpointe working on it (this from apexcode)', '985929063');

TwitterForce t = new TwitterForce('someUsername', 'somePassword');

List<TwitterForce.Status> statuses = t.friendsTimeline();

Rebuilding the Twitter UI in VF is not that interesting, but being able to programmatically interact with Twitter from Apex code is. Here's the code; it includes the TwitterForce client class, some tests, the demo VF controller, and demo VF page. Remember you'll need to add http://twitter.com/ to your org's remote sites for the API calls to work. Enjoy, and see you at #dreamforce. apex Twitter API.zip

Monday, September 8, 2008

Just like I described yesterday for the API, you can do exactly the same foreign key resolution using external Ids in Apex code, e.g.

Case c = new Case(subject='Apex FKs');

Account a = new Account(extId__c='00001');

c.account = a;

insert c;
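What happens to that nested Account can be pictured as a simple lookup: find the parent row whose named external Id field matches, then store its primary key in the foreign key field. The sketch below is a toy model in Python, not how Salesforce implements it; the function name `resolve_foreign_keys`, the index layout, and the Id value are all made up for illustration.

```python
def resolve_foreign_keys(record, index):
    """Return a copy of record with nested external-Id references
    replaced by the matching parent's primary key (toy model only)."""
    resolved = {}
    for field, value in record.items():
        if not isinstance(value, dict):
            # Plain field: copy it through unchanged.
            resolved[field] = value
            continue
        if len(value) != 1:
            raise ValueError("reference %s must name exactly one external Id field" % field)
        # The single key of the nested object says WHICH external Id field to match on.
        ext_field, ext_value = next(iter(value.items()))
        try:
            parent_id = index[field][ext_field][ext_value]
        except KeyError:
            raise LookupError("no %s with %s = %r" % (field, ext_field, ext_value)) from None
        # Store the parent's primary key in the foreign key field, e.g. Account -> AccountId.
        resolved[field + "Id"] = parent_id
    return resolved


# Hypothetical data: one account, known to the other system as 00001.
accounts_index = {"Account": {"extId__c": {"00001": "001-hypothetical-id"}}}
new_case = {"Subject": "Apex FKs", "Account": {"extId__c": "00001"}}

resolved_case = resolve_foreign_keys(new_case, accounts_index)
print(resolved_case)
```

The caller never needs to know the Salesforce Id of the account; an external Id value with no matching parent is reported as an error rather than silently creating an orphaned case.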

Sunday, September 7, 2008

One of the tedious things about integrating data across systems is mapping keys. Say your account master system is pushing data into Salesforce.com: both systems have their own beliefs about what the primary key for one of those accounts is. One of the things Salesforce.com has supported for a while is the concept of external Ids. These are custom fields in Salesforce that you've said are a primary key in some other system. By setting this up, you can delegate all of the key matching drudgery to the Salesforce.com infrastructure. Most people know about the upsert function, which allows the caller to create or update a row based on an external Id value, rather than the Salesforce.com primary key.

But wait, order in the next 5 minutes and we'll throw in the ability to resolve foreign keys as well. This much less understood feature allows you, while calling create/update/upsert, to use external Ids to resolve foreign keys too. Keeping with our account master example, you may have a system that needs to create Cases in Salesforce, and it only knows the account master's account Id, not the Salesforce account Id. To use this, rather than passing the FK itself, you populate the relationship element, and populate its external Id field. It's harder to explain than to show, so here's an example: this C# code will create a new case, related to an account, where we only know one of the account's external identifiers.

static void createCase(sf.SforceService s, String accExtId, String caseSubject) {

sf.Case c = new sf.Case();

c.Subject = caseSubject;

sf.Account a = new sf.Account();

a.extId__c = accExtId;

c.Account = a;

sf.SaveResult sr = s.create(new sf.sObject[] { c })[0];

if (sr.success)

Console.WriteLine("New case created with Id {0}", sr.id);

else

Console.WriteLine("Error creating case : {0} {1}", sr.errors[0].statusCode, sr.errors[0].message);

}

Note that rather than populating the AccountId field on Case with the Salesforce.com Account's Id, we populate an Account with its extId__c value instead. Because you can have multiple external Ids on a particular object, this nested object is used to tell Salesforce.com which particular external Id field you're using. If you're not a C# fan, then the raw SOAP request looks like this:

<soap:Body>

<create xmlns="urn:enterprise.soap.sforce.com">

<sObjects xmlns:q1="urn:sobject.enterprise.soap.sforce.com" xsi:type="q1:Case">

<q1:Account>

<q1:extId__c>00001</q1:extId__c>

</q1:Account>

<q1:Subject>test case</q1:Subject>

</sObjects>

</create>

</soap:Body>

Friday, August 29, 2008

I see Joe is busy standardizing a way to bundle binary data with Atom metadata; it looks a lot like SwA, just switch out the SOAP envelope for an Atom envelope. Amazon's S3 uses DIME for their SOAP API (good for them, I always liked DIME much better than SwA or MTOM), and their REST API uses HTTP extension headers to carry any metadata. Is there anything else besides base64, which is fine for smallish stuff but fails in the face of larger binary data? (The more things change, the more they stay the same.) Should I be brushing up my MIME parsing skills? Is WebDAV interop any better than it used to be?

Monday, July 14, 2008

The web services interop registration service I set up many moons ago has faithfully been chugging along, but I think its time has come to an end. I'll be taking it down sometime next week.

Monday, November 12, 2007

I found it hilarious that Don moans about HTTP auth and then points to WS-Security as the way forward. I just can't decide if Don was trying to be funny or is actually serious (in which case he's been in Redmond too long). Looks like I'm not the only one.

Friday, May 4, 2007

Is coming up fast, May 21st in Santa Clara, CA. This is a great chance to get up to speed with Apex Code and other parts of the Salesforce.com platform. As well as the regular ADN crew, I'll be there along with some other folks from the API team, so bring your Apex Code, API and Web Services questions; it's the best chance to get answers straight from the horse's mouth before Dreamforce. Best of all, it's free, sign up now.

Saturday, April 28, 2007

Scott Hanselman is again doing the Diabetes Walk, and this year is stepping up his goal to $50,000. Go ahead and help him meet the goal. To the Salesforce.com folks out there, remember that they'll match your contribution, and I'm sure many other employers do as well; it's an easy way to make your money go twice as far.

Thursday, April 5, 2007

Paul Fremantle has a post titled the devil is in the details, where he discusses interop issues around wrapped vs unwrapped WSDLs. Unfortunately that's totally missing the point. Why on earth should the service provider get to dictate to me what programming model I want to use? Why do the tools insist on trying to divine a programming model from the WSDL, when it should be the programmer's choice? Give me a switch in WSDL2Java or wsdl.exe that says generate me a wrapped or unwrapped stub.

Tuesday, February 13, 2007

As I mentioned way back in April 2003, wsdl.exe /server is basically useless. This is fixed in .NET 2.0, and wsdl.exe /serverInterface works pretty much as you'd expect (or at least as I'd expect, which is good enough for me). If you're trying to use the new Outbound Messaging feature that was part of the recent Winter release, and you're still on .NET 1.1, then you'll run into this real fast. For those who haven't seen the feature, basically you specify some condition to send you a message, you define what fields you want in the message from the target object, and we generate a WSDL that represents the messages we'll send you. You feed this WSDL into your tool of choice to spit out your server skeleton, and off you go. Well, unless you're on .NET 1.1, where it's not remotely obvious what to do. So, here's a quick guide to getting up and running with Outbound Messaging and .NET 1.1.

- Stop, why are you still on .NET 1.1? Are you sure you can't move up to 2.0? You should seriously investigate this first.

- OK, if you're still here, go into the Salesforce.com app, Setup, Workflow and set up your rule and outbound message; in the message pick the fields you want (I picked the Contact object and the firstName & lastName fields).

- Right click on the "Click for WSDL" link, and save it somewhere.

- From the command line, run wsdl.exe /server om.wsdl to generate the stub class and types file (it'll be called NotificationService.cs by default)

- You'll notice that the generated class is abstract, but don't fall for the trick of creating a concrete subclass, it's not going to work.

- Horrible as it is, the easiest way forward is to now modify the generated code: remove the abstracts, and add an implementation to the notifications method. Here I'll just copy the contact objects to a static list so I can have another page view the list of contacts I've received.

    [System.Web.Services.WebServiceBindingAttribute(Name="NotificationBinding", Namespace="http://soap.sforce.com/2005/09/outbound")]
    [System.Xml.Serialization.XmlIncludeAttribute(typeof(sObject))]
    public class NotificationService : System.Web.Services.WebService {

        ///
        [System.Web.Services.WebMethodAttribute()]
        [System.Web.Services.Protocols.SoapDocumentMethodAttribute("", Use=System.Web.Services.Description.SoapBindingUse.Literal, ParameterStyle=System.Web.Services.Protocols.SoapParameterStyle.Bare)]
        [return: System.Xml.Serialization.XmlElementAttribute("notificationsResponse", Namespace="http://soap.sforce.com/2005/09/outbound")]
        public notificationsResponse notifications(
            [System.Xml.Serialization.XmlElementAttribute("notifications", Namespace="http://soap.sforce.com/2005/09/outbound")] notifications n) {
            // Copy each received contact into the shared static list.
            foreach(ContactNotification cn in n.Notification) {
                lock(objects) {
                    objects.Add(cn.sObject);
                }
            }
            // Acknowledge the message so it isn't re-sent.
            notificationsResponse r = new notificationsResponse();
            r.Ack = true;
            return r;
        }

        private static System.Collections.ArrayList objects = new System.Collections.ArrayList();

        // Returns a snapshot copy, so callers can enumerate it without holding the lock.
        public static System.Collections.ArrayList CurrentObjects() {
            lock(objects) {
                return (System.Collections.ArrayList)objects.Clone();
            }
        }
    }

- Next up you need to compile this into a dll: run csc /t:library /out:bin/om.dll NotificationService.cs (this assumes you've already created the bin directory that ASP.NET needs). Now you can wire it up as a web service. You can do this by editing the web.config to map a URI to the class, or just use a dummy asmx page that points to the class; that's what I did, here's om.asmx

    <%@ WebService class="NotificationService" %>

- At this point you should be able to hit the asmx page with a browser and get the regular .NET asmx service page.

- Next, we'll add a simple aspx page that can access the static objects collection and print out the details from the messages it got. (obviously a real implementation should do some real work in the notifications method, but this is a handy test)

    <%@ Page language="C#" %>
    <html><head>
    </head><body>
    <h1>Received Contact ids</h1>
    <%
    foreach(Contact c in NotificationService.CurrentObjects()) {
        Response.Write(string.Format("{0} {1} {2}<br>", c.Id, Server.HtmlEncode(c.FirstName), Server.HtmlEncode(c.LastName)));
    }
    %>
    </body></html>

- That's it, go into the app, trigger your message, and refresh the aspx page to see the data from the message we sent you.

A slightly cleaner approach is to actually do the subclass, and to copy over all the class and method attributes to the subclass as well; at least then you can easily re-run wsdl.exe if you need to (say if you changed the set of selected fields).
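Stripped of the .NET plumbing, the listener's job is small: pull the sObject out of each Notification and answer with an Ack. Here is a minimal sketch of that in Python, offered as an illustration rather than a replacement for the steps above. The outbound namespace and the element names (notifications, Notification, sObject, notificationsResponse, Ack) come from the generated code; the sample message, its field values, and the helper names are assumptions made up for the example, and a real message carries more elements than shown here.

```python
import xml.etree.ElementTree as ET

# Namespace taken from the attributes on the generated NotificationService class.
OM_NS = "http://soap.sforce.com/2005/09/outbound"
SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"

# Response that acknowledges receipt, mirroring r.Ack = true in the C# code.
ACK_RESPONSE = (
    '<soapenv:Envelope xmlns:soapenv="%s"><soapenv:Body>'
    '<notificationsResponse xmlns="%s"><Ack>true</Ack></notificationsResponse>'
    '</soapenv:Body></soapenv:Envelope>' % (SOAP_NS, OM_NS)
)


def local_name(tag):
    """Strip the '{namespace}' prefix ElementTree puts on tag names."""
    return tag.rsplit("}", 1)[-1]


def handle_notifications(request_xml):
    """Parse one outbound message; return (list of field dicts, ack response)."""
    root = ET.fromstring(request_xml)
    records = []
    for notification in root.iter("{%s}Notification" % OM_NS):
        sobject = notification.find("{%s}sObject" % OM_NS)
        if sobject is None:
            continue
        # One dict per record, keyed by field name without its namespace.
        records.append({local_name(field.tag): field.text for field in sobject})
    return records, ACK_RESPONSE


# Hypothetical, cut-down message with a single Contact in it.
SAMPLE_MESSAGE = (
    '<soapenv:Envelope xmlns:soapenv="%s"><soapenv:Body>'
    '<notifications xmlns="%s">'
    '<Notification><Id>made-up-notification-id</Id>'
    '<sObject xmlns:sf="urn:sobject.enterprise.soap.sforce.com">'
    '<sf:Id>made-up-contact-id</sf:Id>'
    '<sf:FirstName>Ada</sf:FirstName><sf:LastName>Lovelace</sf:LastName>'
    '</sObject></Notification>'
    '</notifications></soapenv:Body></soapenv:Envelope>' % (SOAP_NS, OM_NS)
)

records, response = handle_notifications(SAMPLE_MESSAGE)
print(records)
```

As with the aspx test page, a real listener would do some actual work with the records before acknowledging them.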

Saturday, January 27, 2007

The release train rolls on. I just posted an updated version of PocketHTTP to address a couple of issues: (i) you could still get bogus gzip errors because under certain conditions it would apply the CRC calc out of order, and (ii) there was an issue with how the connection pool managed proxied SSL connections that would result in it giving you the wrong connection if you were trying to do proxied SSL to multiple different target hosts.

Thursday, January 18, 2007

I was at Apex day in San Francisco yesterday. There was an impressive turn-out; I saw lots of new faces, along with a few familiar faces from Dreamforce. Developers at the event were lucky enough to be able to sign up for the developer prerelease of Apex Code. In addition to the support for triggers that Apex Code brings, Apex Code also supports exposing your code as a fully fledged web service, complete with WSDL, and powered by the same high performance, highly interoperable web services implementation that drives the primary salesforce.com API. In Apex code the private/public specifier has an additional option: it's actually private/public/webService. Simply create your code in a package, and mark the method as webService, e.g.

package wsDemo {

webService String sayHello(String name) {

return 'Hello ' + name;

}

}

webService ID createOrder(Opportunity o, OpportunityLineItem [] items) {

insert(o);

for (OpportunityLineItem i : items) {

i.opportunityId = o.Id;

}

insert(items);

return o.Id;

}

Sunday, December 24, 2006

Couple of new builds out with some minor bug fixes. First up, a new build of PocketHTTP that fixes an intermittent problem some people had seen when it's reading gzip compressed responses. Second up, an update to SF3: this fixes a problem caused by a particular combination of contact details in Address Book on initial sync, and it also addresses a problem with reading all day events from Salesforce and them ending up with the wrong date in iCal. Next time you start SF3 it'll tell you about (and offer to install) the updated version, assuming you left the check for updates preference on. Right now I'm suffering from having way more ideas than time to work on them. Happy Holidays!

Friday, December 8, 2006

Over on ADN there's a tech note that explains how to add request & response compression support to .NET generated web services clients. As .NET 2.0 provides support for response compression out of the box now (once you've turned it on), you'll find this code fails on .NET 2.0 because it ends up trying to decompress the response twice. So I updated the code for .NET 2.0; it now only has to handle compressing the request. The nice thing about the approach it takes (subclassing the generated proxy class) is that you don't have to change the generated code at all, so it doesn't matter how many times you do Update Web Reference, you'll still be in compressed goodness. So, all you need is this one subclass wrapper around your generated proxy class and you're good to go.

class SforceGzip : sforce.SforceService

{

public SforceGzip()

{

this.EnableDecompression = true;

}

protected override System.Net.WebRequest GetWebRequest(Uri uri)

{

return new GzipWebRequest(base.GetWebRequest(uri));

}

}

Then in your code that uses the proxy, just create an instance of this puppy instead of the regular proxy, e.g.

sforce.SforceService svc = new SforceGzip();

sforce.LoginResult lr = svc.login(args[0], args[1]);

All this is included in the sample project in the download, share and enjoy, share and enjoy.
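For anyone curious what request compression amounts to on the wire, here is a minimal sketch in Python rather than C#. It is not the code from the download: it assumes the request wrapper boils down to gzipping the request body and labelling it with a Content-Encoding header, and the function name `compress_request` and the sample payload are made up for illustration.

```python
import gzip


def compress_request(body, headers):
    """Return (gzipped body, updated headers) for an outgoing request."""
    compressed = gzip.compress(body)
    new_headers = dict(headers)  # leave the caller's headers untouched
    new_headers["Content-Encoding"] = "gzip"  # the request body is gzipped
    new_headers["Accept-Encoding"] = "gzip"   # ask for a gzipped response too
    new_headers["Content-Length"] = str(len(compressed))
    return compressed, new_headers


# Hypothetical, repetitive payload; XML like this tends to compress very well.
soap = b"<soap:Envelope>" + b"<item>hello</item>" * 200 + b"</soap:Envelope>"

wire_body, wire_headers = compress_request(
    soap, {"Content-Type": "text/xml; charset=utf-8"})
print(len(soap), "bytes before,", len(wire_body), "bytes after")
```

The response side is the part .NET 2.0 now handles for you once EnableDecompression is turned on, which is why the subclass above only has to deal with the request.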