Simon Fell > Its just code

Thursday, February 17, 2022

This is part 1 in a series of posts about writing an app that does fuel calculator/strategy for iRacing.

Fuel Calculator

What’s a fuel calculator anyway? Imagine a 30 lap race in a car that can only run 20 laps on a full tank of gas. At some point in the race you need to stop and get more gas. You could stop at lap 10, top up and run to the end, or stop at lap 20 and take half a tank to get you to the end. That one is easy enough to do in your head, but throw in caution laps, fuel saving from drafting, and timed races and it gets harder to keep track of. A Fuel calculator app tracks the status of the car & race from iRacing and reports information the driver can use to help determine strategy, when to stop, when to fuel save.

There are of course a number of existing apps for this with varying degress of sophisitaction but I wanted something to help specifically around multi stop races and fuel saving. In this recent TNT race at Pheonix it would of been useful to know exactly how much fuel was needed to be saved to be able to skip the last pitstop that was only a couple of laps from the end of the race. It’d also be useful to know if you’re hitting that fuel save target or if you can’t, and should stop trying. The worst outcome is you try to fuel save, costing you time and potentially positions, but don’t save enough and have to stop anyway. Which is what happened to me, and quite a few other folks as well.

Why Rust?

iRacing provides a c++ based SDK for accessing telemetry data, and some people have built .NET versions as well. I could of written it in C#, but i find the VS2019 winforms tooling super annoying to use. I was going to use go, but then remembered that Windows Defender seems to consider anything built with go as having a virus in it. This is apparently beyond the ability of Microsoft and Google to fix. But I’ve been learning rust over the last year or so, so thought it’d be an interesting exercise to write it in Rust. The iRacing SDK is based around memory mapped data and a bunch of c structs that include a description of the data as well as the actual telemetry data. Given that there’s going to be a lot of unsafe Rust code to deal with that it’ll be interesting to see how much Rust helps vs gets in the way.

Parts

I broke the problem up into 3 main areas

- Getting data out of and into iRacing.

- Collecting the relevant data from iRacing to construct the current state of the race, as well as calculating additional needed stats like average fuel usage per lap.

- Calculating strategy options given the current state of the race.

We’ll start by looking at the strategy options, and cover the other areas in later posts.

Fuel Strategy

We need various peices of information to be able to generate a guestimate of the later laps. If you want to see the whole code for this part, see strat.rs

- The fuel used and time taken for a typical lap.

- The fuel used and time taken for a lap under yellow flag conditions.

- How much fuel is currently in the car.

- What’s the maximum amount of fuel the car can hold.

- How the race ends. (laps, time, both)

- If we’re currently under yellow, and if so, how many more yellow laps are expected.

A struct to hold this is easy enough, getting some of this data not so much. To know when you can pit, you need to know when the race will end. Races can be setup in different ways, a fixed number of laps, a fixed amount of time, or a combo laps & time, where the race ends when the first of either is reached. My first instinct was to capute this with a pair of Option fields, e.g.

pub struct EndsWith {

laps: Option<usize>,

time: Option<Duration>,

}

The one downside to this is that this struct can be constructed in an invalid state, e.g. EndsWith{None,None}. Ideally

we’d use the type system to not let this happen at all, compile time checks are better than runtime checks. We can use

enum’s for this, enums in Rust are much more powerful than in other c’ish languages. In my Rust journey so far, I find

that enums are often the answer.

pub enum EndsWith {

Laps(usize),

Time(Duration),

LapsOrTime(usize, Duration),

}

Now you can’t construct an EndsWith that doesn’t specify one of the valid options.

So here’s the struct that defines a strategy calculation request.

pub struct Rate {

pub fuel: f32,

pub time: Duration,

}

pub struct StratRequest {

pub fuel_left: f32,

pub tank_size: f32,

pub yellow_togo: usize,

pub ends: EndsWith,

pub green: Rate,

pub yellow: Rate,

}

First up we need to work out how many laps we can do with the current fuel, then fill up and repeat until the end of the race. We also need to deal with being under yellow flag conditions, where much less fuel is used, and the laps take much longer. So the first few laps we apply might be yellow flag laps before the race goes back to racing. Or there’s the edge case where the race will finish under the yellow flag. I had a few goes at this, the simple case where there’s no yellows and a known number of laps is pretty simple (divide fuel by rate to get number of laps). But then dealing with timed races is a challenge, you’d have to calculate the expected number of laps first. And then dealing with yellows makes it even messier. I finally settled on an approach that just walks forward one lap at a time applying the relevant rate. This can also accumulate time to determine when the end is.

I built an Iterator chain that provides the stream of future laps, this can then be iterated over and broken up into stints. I like how the code ended up, it seems much tidier to me than the previous attempts that had various loops and a bunch of variables tracking different states.

let yellow = iter::repeat(self.yellow).take(self.yellow_togo);

let mut tm = Duration::ZERO;

let mut laps = 0;

let laps = yellow.chain(iter::repeat(self.green)).take_while(|lap| {

tm = tm.add(lap.time);

laps += 1;

match self.ends {

EndsWith::Laps(l) => laps <= l,

EndsWith::Time(d) => tm <= d,

EndsWith::LapsOrTime(l, d) => laps <= l && tm <= d,

}

});

Now we can iterate over laps accumulating time & fuel and determine where each run between pitstops occurs (called stints)

let mut stints = Vec::with_capacity(4);

let mut f = self.fuel_left;

let mut stint = Stint::new();

for lap in laps {

if f < lap.fuel {

stints.push(stint);

stint = Stint::new();

f = self.tank_size;

}

stint.add(&lap);

f -= lap.fuel;

}

if stint.laps > 0 {

stints.push(stint);

}

There’s probably a way to write that as part of the iterator chain. I find that thinking about it in this 2 peices easier to think about though.

From here its straight forward enough to create a Pitstop at the end of each stint (except the last one). If the last stint doesn’t require a full fuel load, then the “spare” capacity can be used to pull a pitstop forward. This creates the Pitstop windows, the earlest and latest that you can make the pitstop. For the 30 lap race, 20 lap tank example at the start, this would generate a Pitstop window that begins at lap 10 and ends at lap 20.

Now we have enough information to answer the fuel save question. If I can run laps that use less fuel, can I get rid of a pitstop? If you want to skip the last pitstop, then you’d need to have saved enough fuel to complete the last stint. We know what that is from when we built the Stints. We can include that in our results. Later on we’ll take that info to compute a fuel usage target we can display.

As all the input needed to generate this strategy result is managed externally, it easy to write unit tests for this.

#[test]

fn strat_two_stops() {

let d = Duration::new(40, 0);

let r = StratRequest {

fuel_left: 9.3,

tank_size: 10.0,

max_fuel_save: 0.0,

yellow_togo: 0,

ends: EndsWith::Laps(49),

green: Rate { fuel: 0.5, time: d },

yellow: Rate { fuel: 0.1, time: d },

};

let s = r.compute();

assert_eq!(vec![18, 20, 11], s.laps());

assert_eq!(vec![Pitstop::new(9, 18), Pitstop::new(29, 38)], s.stops);

}

Next time we’ll cover the calculator, this tracks the state of the race, the fuel usage on prior laps to feed into the strategy builder.

Monday, July 5, 2021

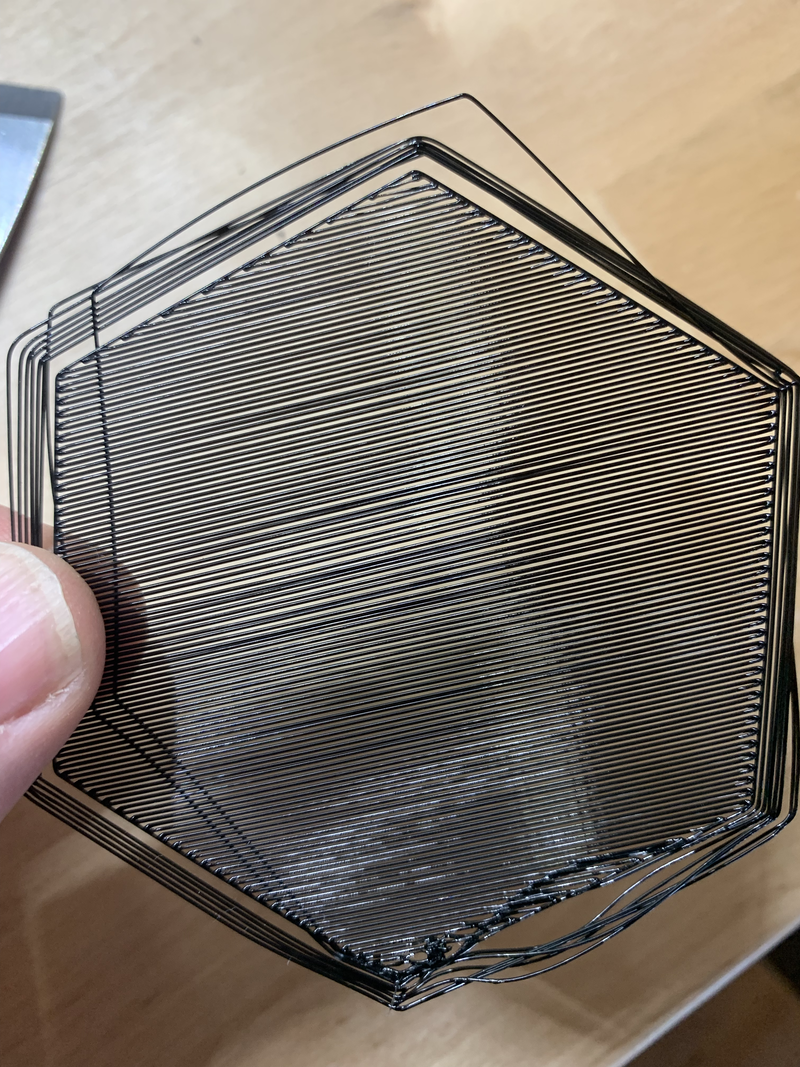

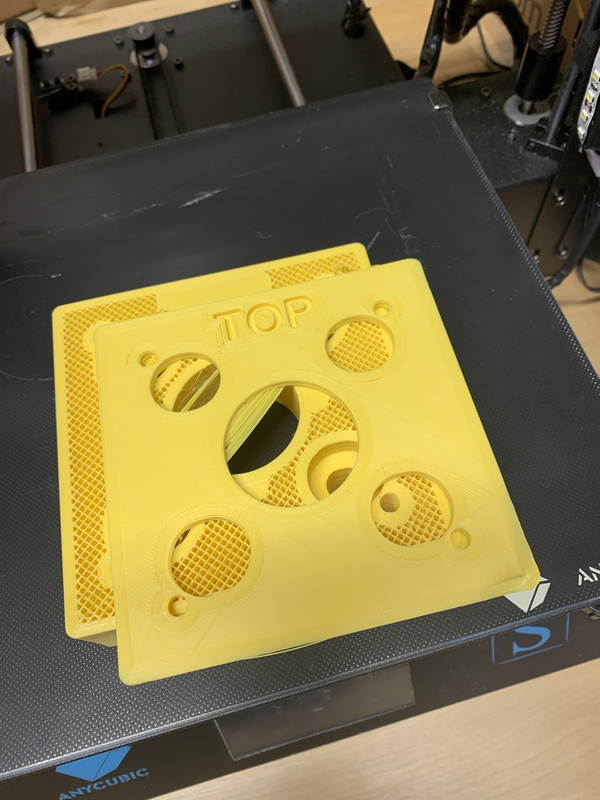

The last post ended with me fighting with prints curling and eventually coming off the bed. I decided to grab a single layer test print and see what the first layer was doing.

Wow that's a terrible first layer, no wonder I've been having issues. It looks like its not close enough to the bed. I'd recently installed a BLTouch, so next step is to do a print without the bed leveling mesh enabled.

That one turned out better, the lines are stuck to each other and the bed. Part of the BLTouch install involves determining the distance between the nozzle and the activation point of the probe. Something must be off there. I carefully go through the process to determine the probe and nozzle offset and then have it measure the bed levelling mesh again. Now a print with the mesh applied.

That one turned out much better, even thickness, good bed adhesion. Time to kick off the 15 hour bearing mount print. Success, it stays stuck to the bed, time to assemble that last actuator.

3 first layer prints. From top to bottom, initial print, no auto bed levelling,

auto bed levelling with recalibrated probe to nozzle setting.

Wednesday, June 9, 2021

I've been working on an SFX100 build. Yeah i know its been a long time, you thought it was long finished. Not so much.

Back in October I'd started on the 3D printing. I got a single set of prints completed, and went through the process to assemble the first actuator. This went reasonably well. The instructions are pretty good, I used a dab of superglue to hold the o-ring onto the bump stop and discovered that you can't get the grease gun on once the slider is fitted to the ball screw. Easy enough to unscrew the slider and then grease the ball-screw. With this success I was in full on 3D printing mode for a couple of weeks to print parts for the remaining 3 actuators. A couple of weeks later, and I'm assembly the 3 remaining actuators. That's when I started running into problems.

First off, I noticed that the couplers were different sizes, I'd gotten 2 the right size, and 2 that were smaller.

.

.

The folks over at ntl-bearing.com quickly sent me 2 replacements.

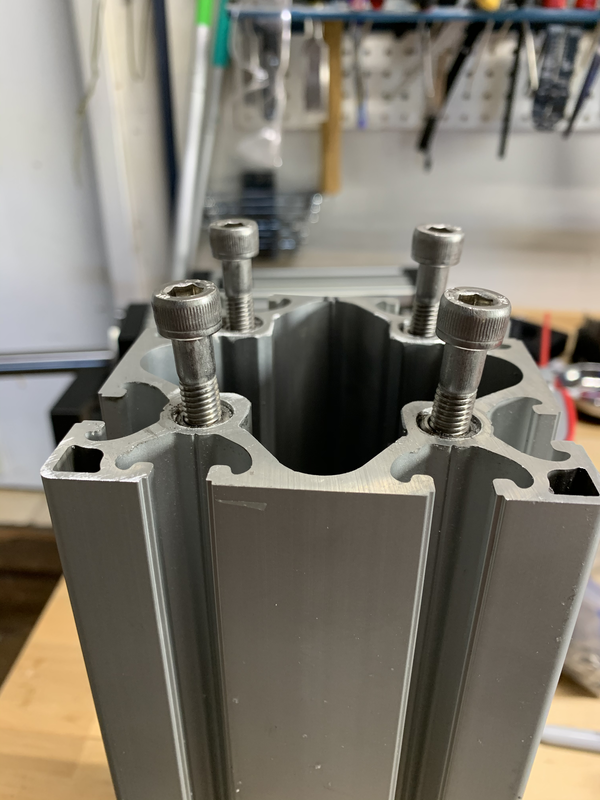

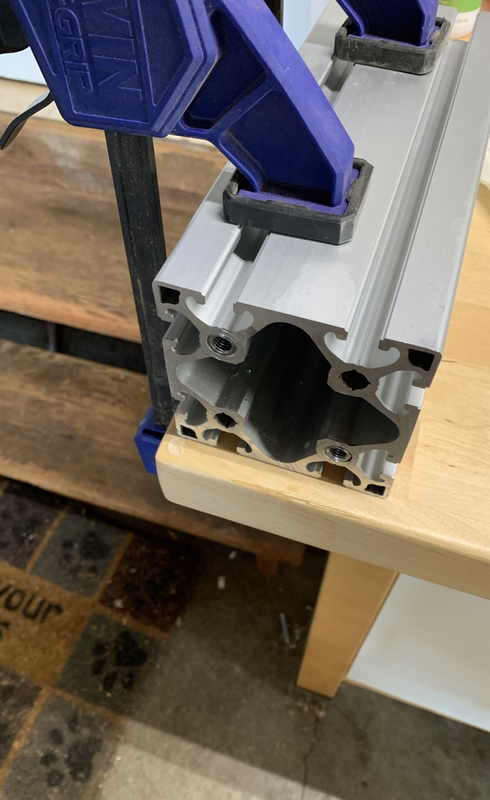

Then i hit bigger problems. Remember back when I said it was important to get the profile inserts in square, this is why.

You can see here that the left side bolts aren't square to the profile, especially

the front left. The top bearing mount design has standard clearance holes for the bolt, but is deep enough that with the

angled bolt you can't get all the bolts through the bearing mount and into the profile. One profile I couldn't get the

bearing mount on at all. The second one I managed to get on, but it got pulled off center and the actuator travel was

really stiff and noisy. You can't get the inserts out of the profile, so its either buy new profile and redo these 2, or

redesign the bearing mount to allow the bolts a bit more room. This only reenforces my earlier recommendation to build the

Item24 variation and have Item24 tap the profile for you.

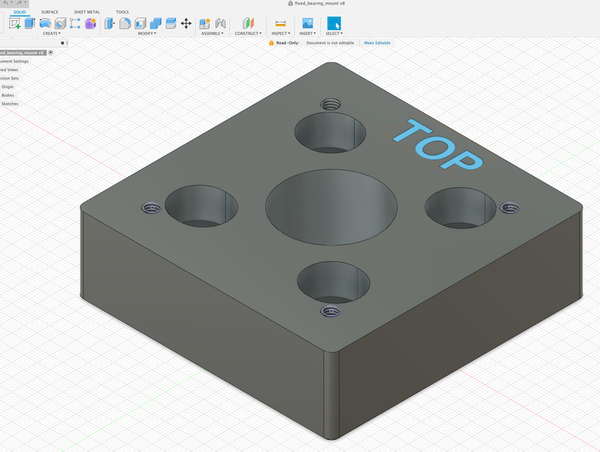

I set about tweaking the bearing mount design. Fusion360 wouldn't import the STL file due to the large number of triangles

in it as a result of their being threaded holes in the model. And only STL files are published for the 3d printed parts.

So i set about recreating it from scratch in Fusion 360. This wasn't too bad, its a rectangle with some holes in it. Most

of the time was spent on checking the dimensions of the hole for the bearing to sit in. I then updated the model to add

a small slot to the bolt holes.

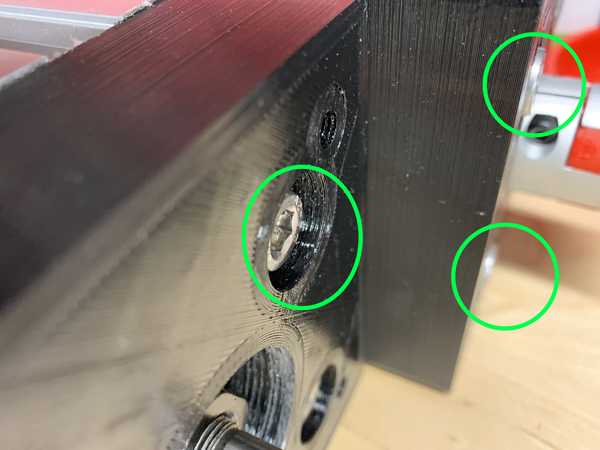

I printed a draft and test fit it and everything seemed okay. Went back and did final prints of 2 more. A few days

later back to assembly. Now, when i did the test fit, i was concentrating on the bearing placement, getting the bolts in

and tightened up and checking that the screw-ball was still square and travelled okay. What I didn't check was the motor

mount that fits on top of the bearing mount. You can see here that I screwed up the depth of the bolt recess and the top

of the bolts protrude slightly above the top of the bearing mount. (left good, right bad)

.

.

Bah. back to Fusion 360, tweak the design, print some more.

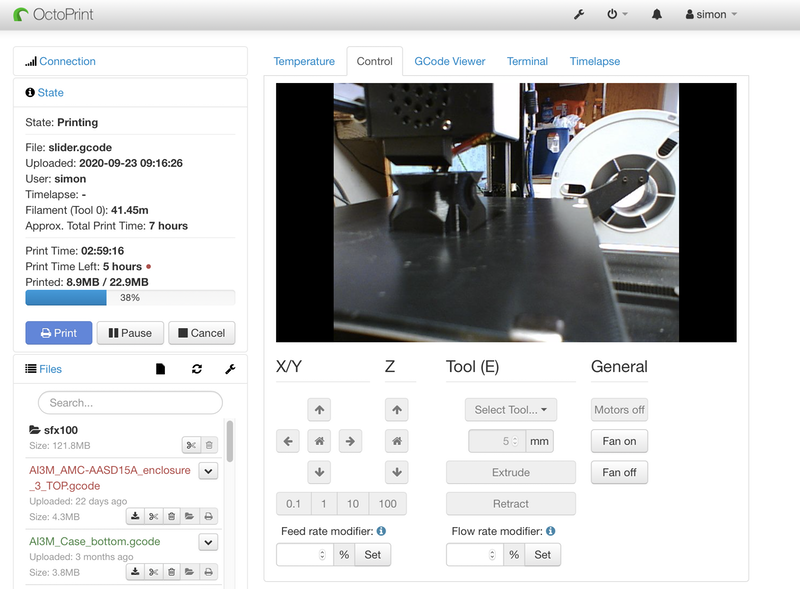

We're now well into December, and it is much colder (relatively, it is California after all) in the garage where the 3D printer lives than it was before. I tried printing the 2 bearing mounts again, and had all sorts of issues. First layer wouldn't stick, and very poor adhesion between layers. After a number of attempts and some research I found some discussions of how the ambient temperature can affect prints. I experimented with different hot-end temperatures and was able to get some better prints, I ended up printing at 220 vs the original temperature of 200. At this point I was tired of fighting the printer on 15 hour prints, and turned to a couple of other projects for a while.

After having enough successful smaller prints, I finally got around to trying to print the bearing mounts again. I kicked off the

print, it seemed to be going well. Checked in after 13 hours, still going strong. Come back when it should of finished to find

this.

I was (and still am) baffled by this, it looks like it just shifted the X and Y

by 100mm at a particular layer. This doesn't seem to be the regular layer shift problems, which are typically mechanical and accumulate

small amounts of drift over many layers. The fact that both X and Y are offset the same amount also implies its not a mechanical issue.

Possibly a bug in the slicer? Possibly something caused Octoprint to miss some steps from the g-code? I still don't know. Without

knowing why it failed I was loathed to try it again. I put it down again, and went and worked on some other projects.

More time passes, I don't see the issue again. I have a 12 hour print go fine. I finally get around to trying the bearing mount again.

That one comes out fine, some slight curling at the corners, but that's to be expected and not a problem. I assemble and test actuator #3,

success!. I kick off the final print, and a few hours later am greeted by this.

.

.

As far

as i can tell, the corners curled enough to unstick the print from the bed, and chaos ensued. More research, I find some recommendations

to start with the part cooling fan off and have it ramp up over the initial layers. I fiddle with slicer settings some more, and kick

off another print. Its running as a type this. It looks okay so far, there's some curling but its less than it used to be. Hopefully

this one completes successfully.

Update: It didn't

Saturday, January 30, 2021

We're continuing with the array search covered in episode 1. I'd recommend reading that before this one.

Lets start with an easy one. One implementation we didn't cover in episode 1 is to use bytes.IndexByte. IndexByte does end up calling a hand craft assembly version so there's some hope this might be decent.

func (n *nodeLoop) getIndexByte(k byte) interface{} {

idx := bytes.IndexByte(n.key[:n.count], k)

if idx < 0 {

return nil

}

return n.val[idx]

}Benchmark_IndexByte-8 11620299 102 ns/op

Benchmark_Loop-8 11181962 102 ns/op

Benchmark_LoopOneBoundsCheck-8 13485289 87.1 ns/op

Benchmark_LoopRev-8 10736464 111 ns/op

Benchmark_LoopRevOneBoundsCheck-8 14661828 81.0 ns/op

Benchmark_BinarySearch-8 4895956 246 ns/op

Benchmark_BinarySearchOneBoundsCheck-8 4806734 249 ns/op

Benchmark_BinarySearchInlined-8 10140198 116 ns/op

Benchmark_BinarySearchInlinedOneBoundsCheck-8 11359298 106 ns/op

PASSThat's disappointing. I generated a cpu profile, and it seems like the overhead of having deal with an arbitrary sized slice out weigh's any gains from the more efficient comparison eventually done. A variation that always passes 16 bytes to bytes.IndexByte and checks the result against n.count performs almost the same. We'll skip the steps for profiling and disassembly, checkout episode 1 if you need the details on how to do that.

Loop Unrolling is a common optimization. From the assembly we looked at previously we know the compiler isn't doing this. We can manually write a loop unrolled version. Lets see how this does.

func (n *nodeLoop) getUnrolledLoop(k byte) interface{} {

switch n.count {

case 16:

if n.key[15] == k {

return n.val[15]

}

fallthrough

case 15:

if n.key[14] == k {

return n.val[14]

}

fallthrough

case 14:

if n.key[13] == k {

return n.val[13]

}

fallthrough

case 13:

if n.key[12] == k {

return n.val[12]

}

fallthrough

...Benchmark_IndexByte-8 11525317 104 ns/op

Benchmark_UnrolledLoop-8 13063185 93.0 ns/op

Benchmark_Loop-8 11788951 101 ns/op

Benchmark_LoopOneBoundsCheck-8 13612688 87.5 ns/op

Benchmark_LoopRev-8 10756114 112 ns/op

Benchmark_LoopRevOneBoundsCheck-8 14641873 82.3 ns/op

Benchmark_BinarySearch-8 4833198 248 ns/op

Benchmark_BinarySearchOneBoundsCheck-8 4789767 250 ns/op

Benchmark_BinarySearchInlined-8 10150819 118 ns/op

Benchmark_BinarySearchInlinedOneBoundsCheck-8 11339012 107 ns/opThere's still a lot of branches in the unrolled version, so not totally surprised with the outcome. Reviewing the assembly for this one, go doesn't use jump tables for the switch statement. This means its doing more comparisons than expected as well.

One thing that stands out from the loops versions assembly is that we have a 64 bit CPU which we're then forcing to do things one byte at time. Can we work 64 bits at a time instead and not have to resort to hand crafted assembly? What if we pack 8 keys into a uint64 and do bitwise operations to compare against the target key? Worst case is we'd have to do this on 2 uint64's instead of 16 bytes. Its doable, but at a cost of being significantly harder to understand than the loop version.

Starting with put we need to work out which uint64 to update, and then shift the key value into the relevant byte of that uint64.

type nodeMasks struct {

keys1 uint64

keys2 uint64

vals [16]interface{}

count int

}

func (n *nodeMasks) put(k byte, v interface{}) {

m := &n.keys1

c := n.count

if n.count >= 8 {

m = &n.keys2

c = c - 8

}

*m = *m | (uint64(k) << (c * 8))

n.vals[n.count] = v

n.count++

}get is more work. First off we need a uint64 that has the value k in each byte. This can be constructed with a bunch of shifts, but turns out to be a significant percentage of the overall work. There are only 256 possible values for this though, so we can calculate them once at startup, and grab that when needed. The lookup table of masks takes 2k bytes (256 * 8) a more than reasonable tradeoff.

func (n *nodeMasks) get(k byte) interface{} {

if n.count == 0 {

return nil

}

// mask is a uint64 with k at each byte position

mask := masks[k]

// act has bytes with value FF in positions we don't want to consider

act := active[n.count-1]

// XOR the mask and the keys, for any bytes that match the result will be 0

// for ones that don't match the result will be not 0.

// OR the result with act so that any key positions we shouldn't consider get

// set to FF

r := (mask ^ n.keys1) | act

// now check each byte in the result for a zero

if (r & b1) == 0 {

return n.vals[0]

}

if (r & b2) == 0 {

return n.vals[1]

}

if (r & b3) == 0 {

return n.vals[2]

}

if (r & b4) == 0 {

return n.vals[3]

}

if (r & b5) == 0 {

return n.vals[4]

}

if (r & b6) == 0 {

return n.vals[5]

}

if (r & b7) == 0 {

return n.vals[6]

}

if (r & b8) == 0 {

return n.vals[7]

}

if n.count < 9 {

return nil

}

// same again for the upper 8 keys

r = (mask ^ n.keys2) | active[n.count-9]

if (r & b1) == 0 {

return n.vals[8]

}

if (r & b2) == 0 {

return n.vals[9]

}

if (r & b3) == 0 {

return n.vals[10]

}

if (r & b4) == 0 {

return n.vals[11]

}

if (r & b5) == 0 {

return n.vals[12]

}

if (r & b6) == 0 {

return n.vals[13]

}

if (r & b7) == 0 {

return n.vals[14]

}

if (r & b8) == 0 {

return n.vals[15]

}

return nil

}Once we've finished with the bit operations, we're left with a uint64. Where the key matches the target value, the byte in the uint64 will be zero otherwise its none zero. We could write a loop to then check each byte, the above code is the unrolled equivalent of that loop, and provides a decent gain over the loop, 70.7ns vs 103ns.

Benchmark_IndexByte-8 11373612 104 ns/op

Benchmark_UnrolledLoop-8 12968982 92.5 ns/op

Benchmark_Masks-8 16908472 70.7 ns/op

Benchmark_MasksWithFinalLoop-8 11663716 103 ns/op

Benchmark_Loop-8 11187504 105 ns/op

Benchmark_LoopOneBoundsCheck-8 13619496 88.3 ns/op

Benchmark_LoopRev-8 10784320 112 ns/op

Benchmark_LoopRevOneBoundsCheck-8 14605256 82.5 ns/op

Benchmark_BinarySearch-8 4791508 254 ns/op

Benchmark_BinarySearchOneBoundsCheck-8 4614759 252 ns/op

Benchmark_BinarySearchInlined-8 10562584 114 ns/op

Benchmark_BinarySearchInlinedOneBoundsCheck-8 11154210 107 ns/opBut we're back to byte at a time, can we do more bit twiddling to decode the index out of the uin64? Turns out we can. With some additional bit fiddling, we can get the result to have just the high bit of the byte set where there was a match, and zero otherwise. At that point we can count the number of trailing zeros to determine which high bit was set.

func (n *nodeMasks) getMoreBitTwiddling(k byte) interface{} {

if n.count == 0 {

return nil

}

// This follows the same approach as get. But uses additional

// bit twiddling to determine if any of the bytes are zero.

mask := masks[k]

r := (mask ^ n.keys1) | active[n.count-1]

// see https://graphics.stanford.edu/~seander/bithacks.html#ZeroInWord

x := (r - 0x0101010101010101) & ^(r) & 0x8080808080808080

idx := bits.TrailingZeros64(x) / 8

if idx < 8 {

return n.vals[idx]

}

if n.count < 9 {

return nil

}

r = (mask ^ n.keys2) | active[n.count-9]

x = (r - 0x0101010101010101) & ^(r) & 0x8080808080808080

idx = bits.TrailingZeros64(x) / 8

if idx < 8 {

return n.vals[idx+8]

}

return nil

}As an aside, despite the fact that bits.TrailingZeros64 appears to be a pure go implementation, the compiler will replace it with the relevant assembly instruction.

Benchmark_IndexByte-8 10000000 103 ns/op

Benchmark_UnrolledLoop-8 10000000 92.9 ns/op

Benchmark_Masks-8 10000000 71.0 ns/op

Benchmark_MasksWithFinalLoop-8 10000000 105 ns/op

Benchmark_MasksWithBitTwiddling-8 10000000 78.1 ns/op

Benchmark_Loop-8 10000000 105 ns/op

Benchmark_LoopOneBoundsCheck-8 10000000 88.2 ns/op

Benchmark_LoopRev-8 10000000 113 ns/op

Benchmark_LoopRevOneBoundsCheck-8 10000000 82.2 ns/op

Benchmark_BinarySearch-8 10000000 250 ns/op

Benchmark_BinarySearchOneBoundsCheck-8 10000000 254 ns/op

Benchmark_BinarySearchInlined-8 10000000 115 ns/op

Benchmark_BinarySearchInlinedOneBoundsCheck-8 10000000 107 ns/opUnexpectedly the bit twiddling approach is slightly slower than the version that used an unrolled loop to decode the return index. Wasn't expecting that, perhaps as all the checks for zero byte don't depend on each other, they can all get speculatively executed, while the bit twiddling approach depends on each previous instruction. Concrete explanations for this welcome. Notice though that either approach is faster than anything else so far.

Our final version is the one described in the art paper, which uses SIMD instructions to compare all 16 keys at once. There's no SIMD intrinsics for go, so this'll have to be in straight up assembly. I used avo to help deal with some of the drudgery involved. (see asm/asm.go in the repo for the full code). XMM registers are 16 bytes wide, and the VPBROADCASTB & PCMPEQB instructions are the primary SIMD instructions involved.

TEXT("Lookup", NOSPLIT, "func(k byte, x *[16]byte) int32")

Pragma("noescape")

Doc("Lookup returns the index into the array 'x' of the value 'k', or -1 if its not there." +

" If k appears at multiple locations, you'll get one of them as the return value, it may not be the first one.")

x := Load(Param("x"), GP64())

k := Load(Param("k"), GP32())

xKey := XMM()

MOVD(k, xKey)

VPBROADCASTB(xKey, xKey) // xmm register now contains the value k in all 16 bytes

xArr := XMM()

MOVUPD(Mem{Base: x}, xArr) // xmm register now contains the 16 bytes of the array x

// Compare bytes for equality between the 2 xmm registers.

// xArr is updated with the result. Where they're equal the byte is set to FF

// otherwise its set to 0

PCMPEQB(xKey, xArr)

rv := GP64()

rOffset := GP64()

XORQ(rOffset, rOffset) // resOffset = 0

MOVQ(xArr, rv) // get the lower 8 bytes from the xmm register into rv

TESTQ(rv, rv) // is rv 0? if not, at least one byte was equal

JNZ(LabelRef("returnCount")) // jump to converting that back to a index

MOVHLPS(xArr, xArr) // move top 64 bits to lower 64 bits in xmm register

MOVQ(xArr, rv) // move lower 8 bytes into rv

TESTQ(rv, rv)

JZ(LabelRef("notFound")) // is rv 0? if so there's no matches, so return -1

// the match was found in the top 8 bytes, so we need

// to offset the final calculated index by 8.

MOVQ(U64(8), rOffset)

Label("returnCount") // return tailing zeros / 8 + offset

idx := GP64()

TZCNTQ(rv, idx) // set idx to the number of trailing zeros in rv

SHRQ(Imm(3), idx) // divide idx by 8 to get from bit position to byte posn.

ADDQ(rOffset, idx) // add the result offset in.

Store(idx.As32(), ReturnIndex(0)) // return the final index as the result.

RET()

Label("notFound")

rMiss := GP32()

MOVL(U32(0xFFFFFFFF), rMiss)

Store(rMiss, ReturnIndex(0)) // return -1

RET()The VPBROADCASTB and PCMPEQB instructions do the heavy lifting, but otherwise it bares a strongly resemblance to the bit mask approach we wrote earlier.

Benchmark_IndexByte-8 11691013 103 ns/op

Benchmark_UnrolledLoop-8 12977037 91.9 ns/op

Benchmark_GetLookupAsm-8 23960334 48.9 ns/op

Benchmark_Masks-8 16845604 70.4 ns/op

Benchmark_MasksWithFinalLoop-8 11721877 102 ns/op

Benchmark_MasksWithBitTwiddling-8 15233120 77.3 ns/op

Benchmark_Loop-8 11848963 101 ns/op

Benchmark_LoopOneBoundsCheck-8 13606868 87.6 ns/op

Benchmark_LoopRev-8 10635825 113 ns/op

Benchmark_LoopRevOneBoundsCheck-8 13355061 88.9 ns/op

Benchmark_BinarySearch-8 4826577 253 ns/op

Benchmark_BinarySearchOneBoundsCheck-8 4798706 250 ns/op

Benchmark_BinarySearchInlined-8 10516956 114 ns/op

Benchmark_BinarySearchInlinedOneBoundsCheck-8 11263238 107 ns/opA clear winner, that's half the time our original loop took, and 30% faster than the next best version.

But which one would i really use? Despite the giant trip down the performance rabbit hole, I'd pick the one that performs the best out of the ones that are easily understandable. That's be the reverse loop with the tweak to remove all but one of the bounds checks, LoopRevOneBoundsCheck. If it later turned out that this particular part of the system was a bottleneck that needed improving then i'd probably go with the assembly version. However I'd need to do a lot more studying first to validate its correctness, not its logical correctness, but its correctness as a assembly function inside a go execution environment.

As a final exercise, I'll leave you to work out at what size does binary search actually win? Let me know what results you get.

Remember how i said it might depend on OS, CPU? Here's some runs from other machines, all using go 1.15.7

Windows 10 with an Intel 9900KS. Faster than the iMac runs, not surprising given its a 3 generations newer processor. But the relative performance between the approaches is pretty similar.

goos: windows

goarch: amd64

pkg: github.com/superfell/loopVsBinarySearch

Benchmark_IndexByte-16 14651924 81.8 ns/op

Benchmark_UnrolledLoop-16 16365361 72.8 ns/op

Benchmark_GetLookupAsm-16 31375419 37.9 ns/op

Benchmark_Masks-16 21862701 55.0 ns/op

Benchmark_MasksWithFinalLoop-16 14606589 81.3 ns/op

Benchmark_MasksWithBitTwiddling-16 18442527 63.3 ns/op

Benchmark_Loop-16 15587856 77.0 ns/op

Benchmark_LoopOneBoundsCheck-16 17430231 68.1 ns/op

Benchmark_LoopRev-16 14259435 85.0 ns/op

Benchmark_LoopRevOneBoundsCheck-16 19040486 64.0 ns/op

Benchmark_BinarySearch-16 6129547 195 ns/op

Benchmark_BinarySearchOneBoundsCheck-16 6129829 196 ns/op

Benchmark_BinarySearchInlined-16 13593127 86.6 ns/op

Benchmark_BinarySearchInlinedOneBoundsCheck-16 14756770 80.6 ns/op

PASSAnd finally ARM/linux running on a Raspberry Pi 4 (this doesn't include a version of the SIMD solution). Different relative performance between the approaches now. The UnrolledLoop does better than any others, and the inlined binary search is not far behind it.

goos: linux

goarch: arm

pkg: github.com/superfell/loopVsBinarySearch

Benchmark_IndexByte-4 1443998 830 ns/op

Benchmark_UnrolledLoop-4 2522744 477 ns/op

Benchmark_Masks-4 2023891 594 ns/op

Benchmark_MasksWithFinalLoop-4 1422764 857 ns/op

Benchmark_MasksWithBitTwiddling-4 1911872 629 ns/op

Benchmark_Loop-4 1708760 700 ns/op

Benchmark_LoopOneBoundsCheck-4 1619178 679 ns/op

Benchmark_LoopRev-4 1728384 737 ns/op

Benchmark_LoopRevOneBoundsCheck-4 1907144 610 ns/op

Benchmark_BinarySearch-4 1000000 1309 ns/op

Benchmark_BinarySearchOneBoundsCheck-4 990722 1219 ns/op

Benchmark_BinarySearchInlined-4 2160622 556 ns/op

Benchmark_BinarySearchInlinedOneBoundsCheck-4 2185908 549 ns/opTuesday, January 26, 2021

The answer to almost every software engineering question is "It Depends". In this post we explore options for finding an item in a small array. Grab a cup of tea and down the rabbit hole we go.

I've been working on art a golang implementation of an adaptive radix tree. In this the tree can use a few differently sized nodes, and move between them to optimize memory usage. One of these node sizes is node16, a node that can store upto 16 child items. Each item is identified by a single byte key. The common read path is given a key tell me the child item or tell me its not there.

My first implementation stored the keys & values in no particular order and scanned them for lookups. At this time in the project I was more interested in something that worked than something that was the most optimal. Here's a simplified version of the code. Note that put makes many simplifying assumptions that aren't relevant to the discussion at hand. You can get all the code from this article from the loopVsBinarySearch repo.

type nodeLoop struct {

key [16]byte

val [16]interface{}

count int

}

func (n *nodeLoop) put(k byte, v interface{}) {

idx := n.count

n.key[idx] = k

n.val[idx] = v

n.count++

}

func (n *nodeLoop) get(k byte) interface{} {

for i := 0; i < n.count; i++ {

if n.key[i] == k {

return n.val[i]

}

}

return nil

}Should i use a binary search instead? It depends. Binary Search would do better for larger arrays, but is it still better for our little 16 byte array? The loop has o(n) time while the binary search is o(log n). In my experience though Big O estimates of algorithmic time can be very miss-leading for small values of n. Lets find out, go has a binary search function in the sort package, which makes this straightforward.

type nodeSorted struct {

key [16]byte

val [16]interface{}

count int

}

func (n *nodeSorted) put(k byte, v interface{}) {

idx := sort.Search(n.count, func(i int) bool {

return n.key[i] >= k

})

copy(n.key[idx+1:], n.key[idx:int(n.count)])

copy(n.val[idx+1:], n.val[idx:int(n.count)])

n.key[idx] = k

n.val[idx] = v

n.count++

}

func (n *nodeSorted) get(k byte) interface{} {

idx := sort.Search(n.count, func(i int) bool {

return n.key[i] >= k

})

if idx < int(n.count) && n.key[idx] == k {

return n.val[idx]

}

return nil

}And lets write a benchmark to compare the 2

var rnd = rand.New(rand.NewSource(42))

var keys = make([]byte, 16)

func init() {

for i := 0; i < len(keys); i++ {

keys[i] = byte(i)

}

rnd.Shuffle(len(keys), func(i, j int) {

keys[i], keys[j] = keys[j], keys[i]

})

}

func Benchmark_Loop(b *testing.B) {

n := new(nodeLoop)

for _, k := range keys {

n.put(k, int(k))

}

b.ResetTimer()

for i := 0; i < b.N; i++ {

for _, k := range keys {

v := n.get(k)

if v != int(k) {

b.Errorf("Got unexpected value of %d for key %d", v, k)

}

}

}

}

func Benchmark_BinarySearch(b *testing.B) {

n := new(nodeSorted)

for _, k := range keys {

n.put(k, int(k))

}

b.ResetTimer()

for i := 0; i < b.N; i++ {

for _, k := range keys {

v := n.get(k)

if v != int(k) {

b.Errorf("Got unexpected value of %d for key %d", v, k)

}

}

}

}Lets run these and see what we get. All these tests were done using go 1.15.7 on OSX 10.15.7 running on an iMac with an Intel i7-6700K CPU @ 4.00GHz. You may get different results with different combinations of OS, go version & processor, it depends.

$ go test -bench .

goos: darwin

goarch: amd64

pkg: github.com/superfell/loopVsBinarySearch

Benchmark_Loop-8 11602429 104 ns/op

Benchmark_BinarySearch-8 4848440 247 ns/op

PASSThe loop version doesn't look so shabby anymore, it runs in ~40% of the time of the binary search. (side note for VSCode users, the go plugin defaults to running code coverage on running benchmarks, which can change the relative output between benchmarks quite a bit. Either turn this off, or run the from the command line). What's going on? fortunately go has some great tools integrated into the tool chain, lets grab a cpu profile and look at that. We'll use the `-benchtime=10000000x` option to have each benchmark run the same number of times rather than the same duration. This will make comparing times between the different implementations easier.

$ go test -bench . -benchtime=10000000x -cpuprofile=cpu.prof

goos: darwin

goarch: amd64

pkg: github.com/superfell/loopVsBinarySearch

Benchmark_Loop-8 10000000 104 ns/op

Benchmark_BinarySearch-8 10000000 253 ns/op

PASSWe can use pprof to examine the generated cpu profile and generate timing annotated versions of the source code. We use the pprof command `list get` to show the functions called get.

$ go tool pprof cpu.prof

Type: cpu

Time: Jan 26, 2021 at 11:05am (PST)

Duration: 3.76s, Total samples = 3.13s (83.32%)

Entering interactive mode (type "help" for commands, "o" for options)

(pprof)type list get at the (pprof) prompt.

(pprof) list get

Total: 3.13s

ROUTINE ============= github.com/superfell/loopVsBinarySearch.(*nodeLoop).get in /Users/simon/Github/loopVsBinarySearch/n.go

480ms 560ms (flat, cum) 17.89% of Total

. . 15: n.key[idx] = k

. . 16: n.val[idx] = v

. . 17: n.count++

. . 18:}

. . 19:

20ms 40ms 20:func (n *nodeLoop) get(k byte) interface{} {

110ms 170ms 21: for i := 0; i < n.count; i++ {

260ms 260ms 22: if n.key[i] == k {

90ms 90ms 23: return n.val[i]

. . 24: }

. . 25: }

. . 26: return nil

. . 27:}

. . 28:

ROUTINE ============= github.com/superfell/loopVsBinarySearch.(*nodeSorted).get in /Users/simon/Github/loopVsBinarySearch/n.go

290ms 1.93s (flat, cum) 61.66% of Total

. . 157: n.key[idx] = k

. . 158: n.val[idx] = v

. . 159: n.count++

. . 160:}

. . 161:

50ms 50ms 162:func (n *nodeSorted) get(k byte) interface{} {

110ms 1.75s 163: idx := sort.Search(n.count, func(i int) bool {

. . 164: return n.key[i] >= k

. . 165: })

70ms 70ms 166: if idx < int(n.count) && n.key[idx] == k {

60ms 60ms 167: return n.val[idx]

. . 168: }

. . 169: return nil

. . 170:}

. . 171:

ROUTINE ============= github.com/superfell/loopVsBinarySearch.(*nodeSorted).get.func1 in /Users/simon/Github/loopVsBinarySearch/n.go

680ms 680ms (flat, cum) 21.73% of Total

. . 158: n.val[idx] = v

. . 159: n.count++

. . 160:}

. . 161:

. . 162:func (n *nodeSorted) get(k byte) interface{} {

260ms 260ms 163: idx := sort.Search(n.count, func(i int) bool {

420ms 420ms 164: return n.key[i] >= k

. . 165: })

. . 166: if idx < int(n.count) && n.key[idx] == k {

. . 167: return n.val[idx]

. . 168: }

. . 169: return nilHmmmm, that call to Search is taking a lot of time, lets see what that's doing. (pprof) list Search

ROUTINE ======================== sort.Search in /usr/local/go/src/sort/search.go

950ms 1.64s (flat, cum) 52.40% of Total

. . 54:// return s != "" && s[0] == 'y'

. . 55:// })

. . 56:// fmt.Printf("Your number is %d.\n", answer)

. . 57:// }

. . 58://

50ms 50ms 59:func Search(n int, f func(int) bool) int {

. . 60: // Define f(-1) == false and f(n) == true.

. . 61: // Invariant: f(i-1) == false, f(j) == true.

. . 62: i, j := 0, n

290ms 290ms 63: for i < j {

110ms 110ms 64: h := int(uint(i+j) >> 1) // avoid overflow when computing h

. . 65: // i ≤ h < j

430ms 1.12s 66: if !f(h) {

30ms 30ms 67: i = h + 1 // preserves f(i-1) == false

. . 68: } else {

. . 69: j = h // preserves f(j) == true

. . 70: }

. . 71: }

. . 72: // i == j, f(i-1) == false, and f(j) (= f(i)) == true => answer is i.

40ms 40ms 73: return i

. . 74:}

. . 75:

The f(h) call (line 66) is consuming most of the time here. This is the callback function we passed in. At a guess the compiler can't inline the callback function and because there's so little else going on the function call overhead comes to dominate the time taken. As an experiment we can be our own optimizer and manually inline the search call function. This is basically a cut and paste job from sort.Search, replacing !f(h) with n.key[h] < k

func (n *nodeSorted) getInlinedBinSearch(k byte) interface{} {

// impl of sort.Search manually inlined here

i, j := 0, int(n.count)

for i < j {

h := int(uint(i+j) >> 1) // avoid overflow when computing h

// i ≤ h < j

if n.key[h] < k {

i = h + 1 // preserves f(i-1) == false

} else {

j = h // preserves f(j) == true

}

}

// i == j, f(i-1) == false, and f(j) (= f(i)) == true => answer is i.

if i < int(n.count) && n.key[i] == k {

return n.val[i]

}

return nil

}$ go test -bench .

Benchmark_Loop-8 11763114 101 ns/op

Benchmark_BinarySearch-8 4796678 246 ns/op

Benchmark_BinarySearchInlined-8 10631300 114 ns/op

PASSThat looks better, but surprisingly, still slower than the initial loop version. Lets grab another cpu profile and look at the getInlinedBinSearch function

$ go test -bench . -benchtime=10000000x -cpuprofile=cpu.prof

...

$ go tool pprof cpu.prof

...

(pprof) list getInlinedBinSearchROUTINE === github.com/superfell/loopVsBinarySearch.(*nodeSorted).getInlinedBinSearch in /Users/simon/Github/loopVsBinarySearch/n.go

660ms 680ms (flat, cum) 16.67% of Total

. . 178: return n.val[idx]

. . 179: }

. . 180: return nil

. . 181:}

. . 182:

40ms 40ms 183:func (n *nodeSorted) getInlinedBinSearch(k byte) interface{} {

. . 184: // impl of sort.Search manually inlined here

70ms 70ms 185: i, j := 0, int(n.count)

180ms 200ms 186: for i < j {

40ms 40ms 187: h := int(uint(i+j) >> 1) // avoid overflow when computing h

. . 188: // i ≤ h < j

70ms 70ms 189: if n.key[h] < k {

100ms 100ms 190: i = h + 1 // preserves f(i-1) == false

. . 191: } else {

. . 192: j = h // preserves f(j) == true

. . 193: }

. . 194: }

. . 195: // i == j, f(i-1) == false, and f(j) (= f(i)) == true => answer is i.

20ms 20ms 196: if i < int(n.count) && n.key[i] == k {

140ms 140ms 197: return n.val[i]

. . 198: }

. . 199: return nil

. . 200:}

. . 201:Comparing back to the profile of the original loop, this version spends less time comparing the key to the array entries. That makes sense, Thats the whole point of the binary search. The rest of the loop overhead though negates that though. Possibly at this point we're at the mercy of the CPU's branch predictor. If you know more about the relative overhead of the 2 loops, let me know

Is there anything else we can do to make this faster? In both cases the n.key[index] access comes with a bounds check. I vaguely remember reading somewhere about the compiler being able to optimize away some of the bounds checks, if it checks a high bounds first. Makes sense, if n[10] is not out of bounds of they array, then you already know that a subsequent n[9] call is also not out of bounds. Lets try reversing the order of our loop, see if that helps.

func (n *nodeLoop) getReverse(k byte) interface{} {

for i := n.count - 1; i >= 0; i-- {

if n.key[i] == k {

return n.val[i]

}

}

return nil

}$ go test -bench . -benchtime=10000000x -cpuprofile=cpu.prof

Benchmark_Loop-8 10000000 101 ns/op

Benchmark_LoopRev-8 10000000 108 ns/op

Benchmark_BinarySearch-8 10000000 248 ns/op

Benchmark_BinarySearchInlined-8 10000000 114 ns/opSlightly worse, but were those bounds checks skipped? I'd guess not given the runtime, how can we tell? As far as i know, at this point we have to look at the code generated by the compiler. We can get the compiler to give us an assembly dump of the generated code.

If you get this far, its time to make another cup of tea.

$ go tool compile -S n.go > n5.asmLook through the file and find the section for (*nodeLoop).getReverse. Now, I haven't done assembly since writing 68000 assembly for an Amiga A500. So after copious amounts of web searches, i've annotated the assembly with what (i think) is going on.

"".(*nodeLoop).getReverse STEXT nosplit size=125 args=0x20 locals=0x18

0x0000 00000 (n.go:39) TEXT "".(*nodeLoop).getReverse(SB), NOSPLIT|ABIInternal, $24-32

0x0000 00000 (n.go:39) SUBQ $24, SP

0x0004 00004 (n.go:39) MOVQ BP, 16(SP)

0x0009 00009 (n.go:39) LEAQ 16(SP), BP

0x000e 00014 (n.go:39) FUNCDATA $0, gclocals·4032f753396f2012ad1784f398b170f4(SB)

0x000e 00014 (n.go:39) FUNCDATA $1, gclocals·69c1753bd5f81501d95132d08af04464(SB)

0x000e 00014 (n.go:40) MOVQ "".n+32(SP), DX ;; register DX points to the start of the nodeLoop struct

;; in memory (the n variable in the code)

0x0013 00019 (n.go:40) MOVQ 272(DX), BX ;; copy the value from DX + 272 bytes into BX. 16 for n.keys,

;; 16 x 16 for n.vals get you 272, so BX has the value of the

;; count field

0x001a 00026 (n.go:40) DECQ BX ;; bx = bx-1. register bx is the loop count variable i

0x001d 00029 (n.go:40) MOVBLZX "".k+40(SP), SI ;; set register SI to the value of the parameter k

0x0022 00034 (n.go:40) JMP 39

0x0024 00036 (n.go:40) DECQ BX ;; i--

0x0027 00039 (n.go:40) TESTQ BX, BX ;; bitwise AND of BX and BX, a way to see if i == 0

0x002a 00042 (n.go:40) JLT 93 ;; if i < 0 goto 93 which will do the stack dance for the

;; return nil statement.

0x002c 00044 (n.go:41) CMPQ BX, $16 ;; cmp i to the literal value 16

0x0030 00048 (n.go:41) JCC 111 ;; if i >= 16 goto 111 which will generate an array index out

;; of bounds panic

0x0032 00050 (n.go:41) MOVBLZX (DX)(BX*1), DI ;; set register DI to n.keys[i]

0x0036 00054 (n.go:41) CMPB DIB, SIB

0x0039 00057 (n.go:41) JNE 36 ;; if n.keys[i] != k goto back to 36, which decrements the loop

;; counter and repeats the above tests

0x003b 00059 (n.go:42) SHLQ $4, BX ;; bit shift BX left 4, i = i * 16

;; remember that interface{} takes up 16 bytes

0x003f 00063 (n.go:42) MOVQ 16(DX)(BX*1), AX ;;

0x0044 00068 (n.go:42) MOVQ 24(DX)(BX*1), CX ;; ax & cx contain n.vals[i]

0x0049 00073 (n.go:42) MOVQ AX, "".~r1+48(SP) ;; shuffle them onto the stack for the return value

0x004e 00078 (n.go:42) MOVQ CX, "".~r1+56(SP)

0x0053 00083 (n.go:42) MOVQ 16(SP), BP

0x0058 00088 (n.go:42) ADDQ $24, SP

0x005c 00092 (n.go:42) RET ;; we're done

0x005d 00093 (n.go:45) XORPS X0, X0 ;; here to 110 is for the return nil

0x0060 00096 (n.go:45) MOVUPS X0, "".~r1+48(SP)

0x0065 00101 (n.go:45) MOVQ 16(SP), BP

0x006a 00106 (n.go:45) ADDQ $24, SP

0x006e 00110 (n.go:45) RET ;; we're done

0x006f 00111 (n.go:41) MOVQ BX, AX

0x0072 00114 (n.go:41) MOVL $16, CX

0x0077 00119 (n.go:41) PCDATA $1, $1

0x0077 00119 (n.go:41) CALL runtime.panicIndex(SB)

0x007c 00124 (n.go:41) XCHGL AX, AXThere's some pointer math going on to access the fields and array indexes in the nodeLoop struct. This struct is laid out in memory following the definition of the code.

| Byte Offset | Value |

|---|---|

| 0-15 | n.key[0] through n.key[15] |

| 16-31 | n.val[0] |

| 32-47 | n.val[1] |

| ... | ... |

| 256-271 | n.val[15] |

| 272-280 | n.count |

Code offsets 36 through 57 are the loop, and you can see that it checks BX which is the variable i against 16 every time around. So it has not optimized away any of the bounds checks. Lets see if we can do better at convincing the compiler about this. We'll access the largest array index from the loop before starting the loop.

func (n *nodeLoop) getReverse2(k byte) interface{} {

_ = n.key[n.count-1]

for i := n.count - 1; i >= 0; i-- {

if n.key[i] == k {

return n.val[i]

}

}

return nil

}$ go test -bench . -benchtime=10000000x -cpuprofile=cpu.prof

Benchmark_Loop-8 10000000 102 ns/op

Benchmark_LoopRev-8 10000000 108 ns/op

Benchmark_LoopRevOneBoundsCheck-8 10000000 82.4 ns/op

Benchmark_BinarySearch-8 10000000 250 ns/op

Benchmark_BinarySearchInlined-8 10000000 116 ns/opAlright, looks like we might of done it this time, that's a ~20% decrease in time. Lets double check the assembly that it is doing what we think.

"".(*nodeLoop).getReverse2 STEXT nosplit size=124 args=0x20 locals=0x18

0x0000 00000 (n.go:48) TEXT "".(*nodeLoop).getReverse2(SB), NOSPLIT|ABIInternal, $24-32

0x0000 00000 (n.go:48) SUBQ $24, SP

0x0004 00004 (n.go:48) MOVQ BP, 16(SP)

0x0009 00009 (n.go:48) LEAQ 16(SP), BP

0x000e 00014 (n.go:48) FUNCDATA $0, gclocals·4032f753396f2012ad1784f398b170f4(SB)

0x000e 00014 (n.go:48) FUNCDATA $1, gclocals·69c1753bd5f81501d95132d08af04464(SB)

0x000e 00014 (n.go:49) MOVQ "".n+32(SP), DX ;; register DX points to the start of the nodeLoop struct

0x0013 00019 (n.go:49) MOVQ 272(DX), BX ;; BX = n.count

0x001a 00026 (n.go:49) LEAQ -1(BX), AX ;; AX = BX-1 (I think, not sure)

0x001e 00030 (n.go:49) NOP

0x0020 00032 (n.go:49) CMPQ AX, $16

0x0024 00036 (n.go:49) JCC 113 ;; if AX >= 16 goto 113, which will generate a panic

0x0026 00038 (n.go:50) MOVBLZX "".k+40(SP), CX ;; CX = param k

0x002b 00043 (n.go:50) JMP 48

0x002d 00045 (n.go:50) DECQ AX ;; AX-- (AX is our loop variable i)

0x0030 00048 (n.go:50) TESTQ AX, AX

0x0033 00051 (n.go:50) JLT 95 ;; if i < 0 goto 95, which is return nil

0x0035 00053 (n.go:51) MOVBLZX (DX)(AX*1), BX ;; BX = n.keys[i]

0x0039 00057 (n.go:51) CMPB BL, CL

0x003b 00059 (n.go:51) JNE 45 ;; if n.keys[i] != k goto 45

0x003d 00061 (n.go:52) SHLQ $4, AX

0x0041 00065 (n.go:52) MOVQ 16(DX)(AX*1), CX ;; here through 94 are return n.vals[i]

0x0046 00070 (n.go:52) MOVQ 24(DX)(AX*1), AX

0x004b 00075 (n.go:52) MOVQ CX, "".~r1+48(SP)

0x0050 00080 (n.go:52) MOVQ AX, "".~r1+56(SP)

0x0055 00085 (n.go:52) MOVQ 16(SP), BP

0x005a 00090 (n.go:52) ADDQ $24, SP

0x005e 00094 (n.go:52) RET

0x005f 00095 (n.go:55) XORPS X0, X0

0x0062 00098 (n.go:55) MOVUPS X0, "".~r1+48(SP)

0x0067 00103 (n.go:55) MOVQ 16(SP), BP

0x006c 00108 (n.go:55) ADDQ $24, SP

0x0070 00112 (n.go:55) RET

0x0071 00113 (n.go:49) MOVL $16, CX ; array out of bounds panic

0x0076 00118 (n.go:49) PCDATA $1, $1

0x0076 00118 (n.go:49) CALL runtime.panicIndex(SB)

0x007b 00123 (n.go:49) XCHGL AX, AXNow the loop is between 45 to 59, and you can see that the bounds check (at 32/36) only occurs once.

We can add the same additional array check to the other methods and see if it helps those.

Benchmark_Loop-8 10000000 102 ns/op

Benchmark_LoopOneBoundsCheck-8 10000000 88.3 ns/op

Benchmark_LoopRev-8 10000000 113 ns/op

Benchmark_LoopRevOneBoundsCheck-8 10000000 82.1 ns/op

Benchmark_BinarySearch-8 10000000 250 ns/op

Benchmark_BinarySearchOneBoundsCheck-8 10000000 253 ns/op

Benchmark_BinarySearchInlined-8 10000000 114 ns/op

Benchmark_BinarySearchInlinedOneBoundsCheck-8 10000000 106 ns/opThat worked for the forward loop as well. Didn't work the for function based binary search. From the numbers its not clear what's going on with the inlined binary search versions. Looking at the assembly they both seem to have the same main loop, and do end up doing bounds checks each time. Why the last one is quicker I'm not sure.

For the loops, its interesting that with the bounds checks limited to one that reverse is faster, but with a bounds check each time, forward is faster. Comparing assembly again, the reverse with one bounds check uses TESTQ to compare to 0, and the forward one has to use CMPQ to test against n.count. Perhaps TESTQ is faster than CMPQ? Perhaps the branch predictor can predict the terminate on zero case for the reverse loop better than it can predict the terminate on n.count for the forward loop. If you have a more concrete explanation for the differences between these 2 I'd love to hear it.

Come back for episode 2 where we look at some more esoteric approaches to this array search problem.

Wednesday, October 21, 2020

Previously I'd extracted the sheared bolt from the 2nd piece of profile. 2 more to go. At this point, I've gotten to an approach that works reasonably well, counter sink the hole, use the M12 tap to tap the hole, then install the insert. The larger tap wrench and long handled wrench make this easier, but its still a workout. The main thing to watch is that the tap is kept square to the profile, and the insert is kept square while installing. I got the final 16 inserts installed into the last 2 pieces of profile and step 1 is done!.

There's a variation of the build that uses profile from Item24 rather than Kinetic. Having dealt with the thread inserts which was a giant PIA, I'd highly recommend the Item24 variation instead. The Item24 version uses an M10 thread in the profile and M10 bolts to secure the bearing mounts, no thread inserts involved. Also, Item24 can supply the profile with the threads already cut, vastly simplifying what is the most annoying step of the build.

Tuesday, October 20, 2020

The 3D printer is still whirring along, few days left there. The motors and drives turned up, so I did a sanity test on all them to make sure there's no issues. This was straightforward enough, wiring the motor to the driver, plug the encoder cable in, and wire power to the driver. Use the jog function to check that the motor spins. The opensfx docs cover this well.

Mains voltage can kill you! make sure you know what you're doing, or get help from a qualified electrician.

Saturday, October 10, 2020

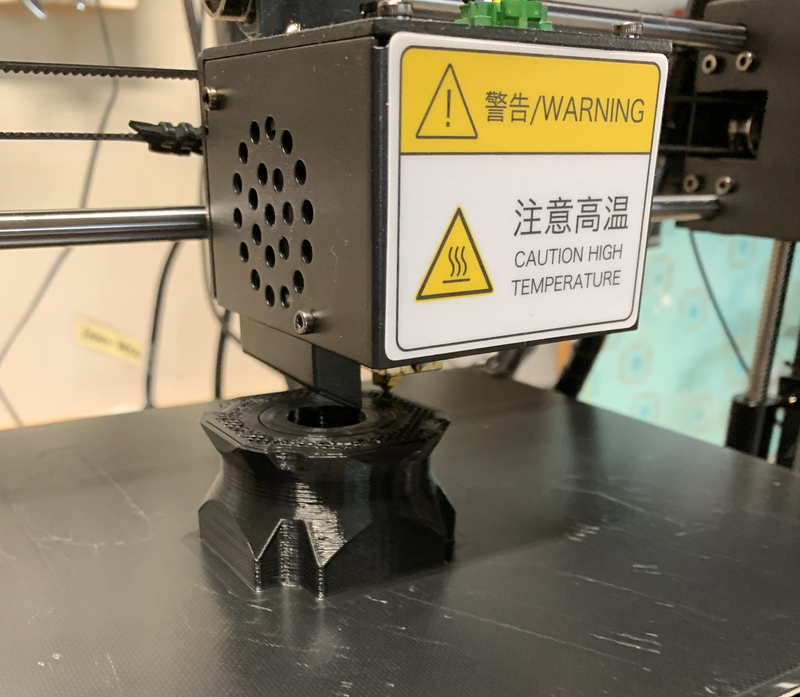

After i ordered the parts, I got started on the 3D printing, there's a lot of it. There's 5 parts to be printed for each actuator. One set of parts takes about 50 hours to print. You can get these printed via a print service. But now that printers are less than $300 it's more economical to buy a printer and print them yourself. Plus as a bonus you'll have a 3D printer at the end of it.

I went with the Anycubic i3 Mega S. It's easy to assemble, just 4 bolts and you're up and running. Getting the print bed level is a PIA, but that seems to be true for all the 3D printers. The Prusa i3 and Ender printers are also popular.

One of the first things i printed was a raspberry pi case/mount so that I could setup Octoprint. This lets you control and monitor your printer from a browser, great for keeping an eye on those 12 hour prints without going out to the garage every time. I use a Sony PS Eye webcam with mine that i picked up for $15. No need to spend more than that for the webcam.

Dimensional accuracy of the prints is important, especially for the slider. Its worth spending the time on some calibration prints first. You'll also want to play close attention to the print profile in the slicer software. The prints are not strong enough with the typical default profile. I setup a custom profile that follows the recommendations. If you're confident in the calibration then go ahead and print all 4 sets. I printed one set first and test fit it to the profile before starting to print the rest.

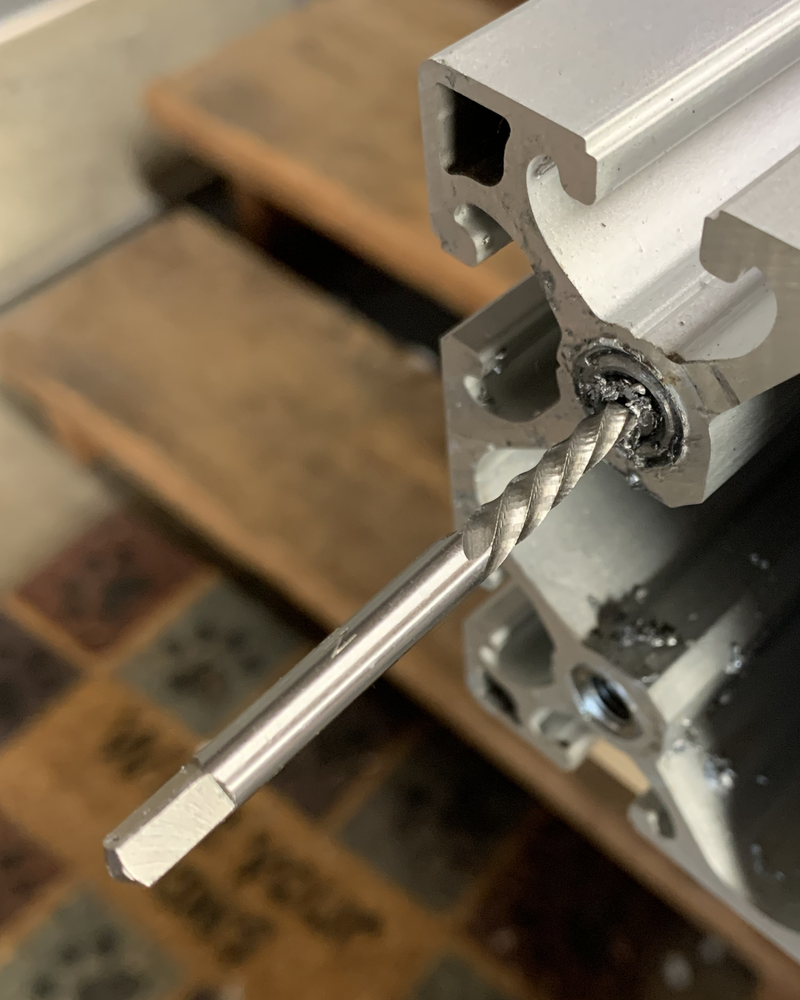

Tuesday, October 6, 2020

If you recall from the previous post I had a sheared bolt inside the thread insert. The bolt extractor tool turned up, so lets fix that!

Drill a hole in the center of the bolt, then tap the extractor into the hole.

Now turn the extractor anti-clockwise using a tap handle, and its out!

Crisis averted!, onward. On the remaining profiles I'm going to counter sink the hole a little before installing the thread inserts, this should make getting them flush easier.

Saturday, October 3, 2020

First step in the actuator build is to install the threaded inserts into the aluminum profile. 4 Profiles, 4 inserts per end, 32 total.

As mentioned in the ordering post, I brought my aluminum profile from a fellow iRacer. They didn't however have the matching thread inserts that kinetic sells. I wasn't able to find these from anywhere, and ended up with some different ones, just to keep things interesting.

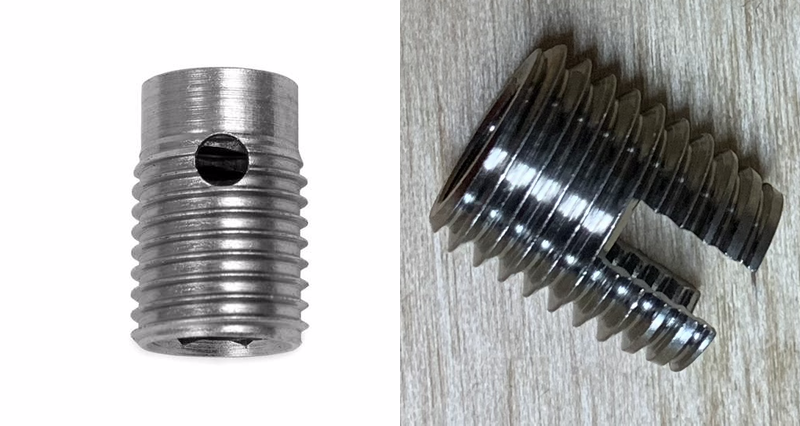

Kinetic insert left, inserts I ended up with on the right. Note that the Kinetic insert has a nice shoulder that helps keep things square when inserting them. Another nice thing about the Kinetic insert is that it has an internal hex, allowing it to be installed with an allen wrench. The info for my inserts mention a specific insertion tool, but i couldn't find anywhere selling them. The insertion tool though is basically a threaded shaft with a nut on it, I made my own with an M8 bolt and nut.

The inserts claim to be self tapping, but have no leading taper, making them very hard to get started. I ended up using a M12 tap in the profile to cut a starting thread that the insert can then use.

2 down, 30 to go, ughh, these are a lot of work. First tapping the aluminum, then the installing the insert. I tried using my battery impact driver, but it didn't have the torque needed to install the insert. One internet order later, i tried a larger AC powered impact driver. That had way to much torque, it mostly ended up stripping the threads on the bolt rather than inserting the insert.

One more internet order later i had a much longer wrench, and one with a built in ratchet end. This made installing the inserts by hand much less work. But that longer leverage means more torque, combine that with the damage done earlier with the impact driver and bingo, one snapped bolt, arrrrgggggghhh.

At this point I've spent a week on this and gotten a grand total of 7 installed inserts. One with a broken bolt stuck in it. One more internet order. when the bolt extract tool turns up this adventure will continue.

Thursday, October 1, 2020

The SFX-100 is an open source/DIY motion actuator for use with racing or flight simulators. I've been enjoying iRacing this year, and thought this would a fun project to work on.

Step 0 is to order everything, there's parts needed from a number of different suppliers, and some of these take weeks or even a month or two to be delivered. The original project parts list is heavy on european suppliers, reflecting where all the development was done. This post on Race Department details better options for US based folks.

I had no issues ordering from ntl bearing or Master Jiangs store on aliexpress, those parts turned up in a couple of weeks. ntl-bearings also now can supply the servo & drives, if you want to cut down the number of different places to order from.

The big lead time is the aluminum profiles from Kinetic, they're a B2B supplier and not set up for lots of little consumer orders. Reports are that it can take 1-2 months for those orders to turn up in the US. I lucked out, someone on the iRacing forums was selling a set of the profiles after decided not to do the build. I purchased those and had them in a week.

These are the main parts, the rest is a laundry list of bits and pieces, all easily available.

While waiting for everything to turn up, its a good time to get started on the 3D printing, there's a lot of it needed.

Saturday, January 19, 2019

I recently got a new power amp for my hifi setup, and it has a 12v trigger input. The trigger input allows for an external signal to turn the amplifer on or off. Its usually connected to a central item so that everything can turned on/off together. I also use Roon, which is a great network music player.

While remembering to turn the amp on is not a problem, remembering to turn it off can be a problem for various reasons. I thought it'd be a fun little project to use the Roon API to have the amp turn on automatically when roon starts playing, and to have it automatically turn off in the evening.

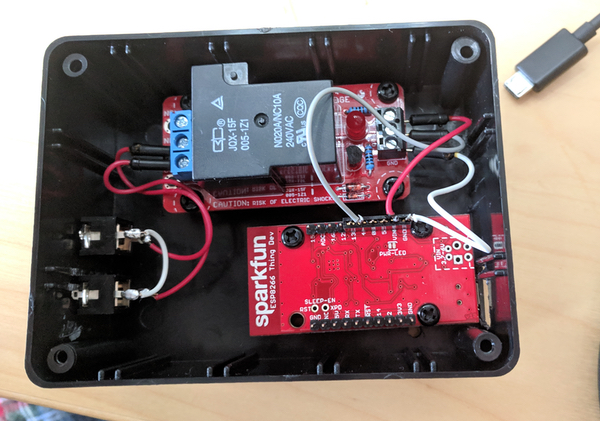

I hatched a plan, using the Sparkfun Thing board, this is a little Wifi enabled processor that has some basic I/O pins and can be programmed using the Arduino software.

- A node app using the roon api listens for state changes in a particular output zone.

- It sends a TCP message over Wifi to the Thing board which turns a relay on.

- The relay is inline in the trigger connection from the preamp to the power amp.

- At a particular time of day, the node app sends the message to turn the relay off.

The Hardware

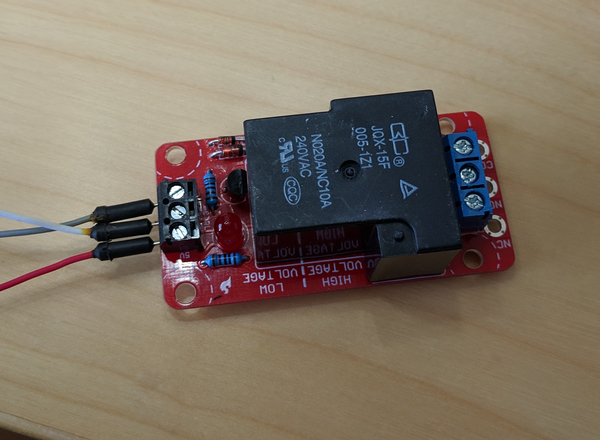

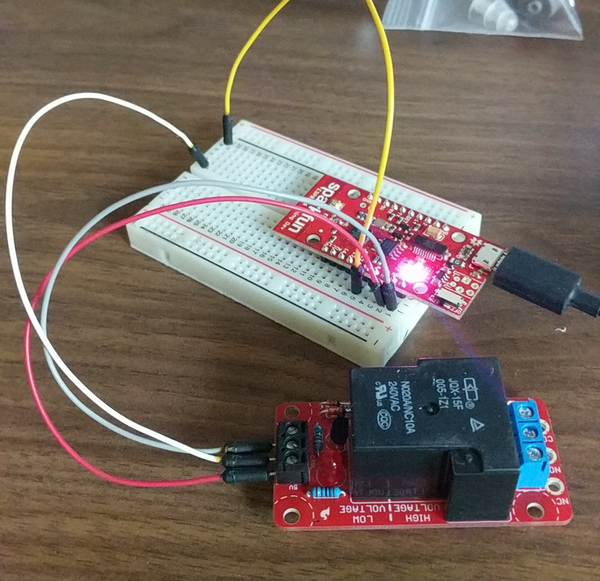

I used the Sparkfun Thing Dev Board and a Relay Kit. The actual relay in the kit is overkill for this use case of switching a low current low voltage signal, but it'll work fine.

First up, build the relay kit, the sparkfun instructions are clear & detailed, and its an easy

build for anyone who's used a soldering iron before.

Next up, I used a breadboard to connect the relay board to the Thing board, the Thing board conviently

has 5v & ground pins you can use to power the relay board, and I connected Thing's GPIO pin 4 to the control

input on the relay board.

After testing with the software, I installed the 2 boards in a little box and connected a pair of mono

3.5mm jacks to the relay switch.

Thing Software

You can program the Thing using the Arduino IDE over USB, simple!. What we need for this is to join the Wifi network, listen on a TCP port, read messages from a client and turn pin 4 on or off.

I used a simple 4 byte TCP message format that supported 3 request messages, STAT, UON & UOFF, which return the current state, turn the output on and turn the ouput off.

The core loop ends up looking like

void loop() {

// Check if a client has connected

WiFiClient client = server.available();

if (!client) {

return;

}

// Wait til there's a 4 byte message ready

while (client.available() < 4) {

delay(5);

}

uint8_t buff[4];

int x = client.read(buff, 4);

if (buff[0] == 'S' && buff[1] == 'T' && buff[2] == 'A' && buff[3] == 'T') {

int state = digitalRead(RELAY_PIN);

client.print(state == HIGH ?"ON \n": "OFF \n");

} else if (buff[0] == 'U' && buff[1] == 'O' && buff[2] == 'N') {

digitalWrite(RELAY_PIN, HIGH);

delay(1000);

client.print("ON \n");

} else if (buff[0] == 'U' && buff[1] == 'O' && buff[2] == 'F' && buff[3] == 'F') {

digitalWrite(RELAY_PIN, LOW);

delay(1000);

client.print("OFF \n");

}

client.flush();

client.stop();

}

Roon API Software

The Roon API ships as a node.js based library. Our code is pretty straightforward- Do a dance to connect to a Roon server.

- Listen for zone state changes for a particular zone.

- If the zone started playing send the 'UON ' message to the thing endpoint.

- At a certain time of day, send the 'UOFF' message to the thing endpoint.

var client = new net.Socket();

client.connect(triggerPort, triggerHost, function() {

// Write a message to the socket as soon as the client is connected, the server will receive it as message from the client

client.write(msg);

});

// Add a 'data' event handler for the client socket

// data is what the server sent to this socket

client.on('data', function(data) {

console.log('TRIGGER SAID: ' + data.slice(0,4));

// Close the client socket completely

client.destroy();

callback(data)

});

You need somewhere you can leave this running, I run it on the Raspberry Pi

I have that runs pihole.

Wrap-up

This was a fun little project, and a nice change of scenery from my day job. The Thing is a great little board for building integrations like this. The full code for both the Thing & the Roon integration is available on Github.Saturday, December 15, 2018

Site has moved to a new webhost and has been migrated from ASP.NET 1.1 (!) to being generated with Hugo. It now supports TLS & HTTP 2.0. Along the way I dropped the RSS & Atom feeds, nothing updates often enough that constantly polling is worth it.

Let me know if you see any problems. And yes this is my bi-annual blog post.

Saturday, December 8, 2018

Tuesday, January 12, 2016

2016 is the year you find out how well you understand all the integrations & API usage you have against the salesforce API. There are 2 changes being made in 2016 that will impact a lot of integrations & API clients.

API retirement for www.salesforce.com

www.salesforce.com hosted the original API login service, many years ago a dedicated login service was hosted at login.salesforce.com. Starting in 2016 the login service running at www.salesforce.com is going to be disabled..

Disablement of TLS 1.0

Support for TLS 1.0 is being disabled, this will affect many HTTPS client libraries, especially those that are not upto date, or don't get their TLS/SSL support directly from the host OS.

Impact for Beatbox

Beatbox is a python library i wrote for accessing the salesforce.com API. If you have integrations built using Beatbox you need to carefully review your python environment in order not to be impacted. Support for TLS 1.1 & TLS 1.2 requires python 2.7.9 (or later) and OpenSSL 1.0.1 (or later). If you're running on older versions that this, when TLS 1.0 is disabled, your integration will stop working!. Note that if you're on OS X, the bundled version of OpenSSL is 0.9.8 and needs to be upgraded to continue to work. There is more info in the readme about this. Beatbox was updated in 2010 to start using login.salesforce.com instead of www.salesforce.com for logins, so if you're beatbox version is older than that, you'll need to update to a new version (or update your usage to explicitly set serverUrl before calling login)Impact for ZKSforce

ZKSforce is a library for iOS & OSX to call the salesforce API. It gets its HTTPS support via the cocoa NSURLRequest class, which is part of the OS. All recent versions of iOS & OS X have support for TLS 1.2 and you shouldn't have any issues related to the TLS 1.0 disablement. However ZKSforce by default sends login requests to www.salesforce.com unless you have a version from Sept 2015 or later. You can either upgrade to the latest version of ZKSforce or explicitly set the auth server before calling login, e.g.[client setLoginProtocolAndHost:@"https://login.salesforce.com"]